This module describes the in-loop filter algorithm in AV1. More details will be added.

av1_pick_filter_level()

Algorithm for AV1 loop filter level selection.

This function determines the proper filter levels used by the in-loop filter (deblocking filter).

- Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [in] | sd | Pointer to the frame buffer |
| [in] | cpi | Top-level encoder structure |
| [in] | method | The method used to select filter levels |

- method includes:

  - LPF_PICK_FROM_FULL_IMAGE: Try the full image with different values.
  - LPF_PICK_FROM_FULL_IMAGE_NON_DUAL: Try the full image filter search with non-dual filter only.
  - LPF_PICK_FROM_SUBIMAGE: Try a small portion of the image with different values.
  - LPF_PICK_FROM_Q: Estimate the level based on quantizer and frame type.
  - LPF_PICK_MINIMAL_LPF: Pick 0 to disable LPF if LPF was enabled last frame.

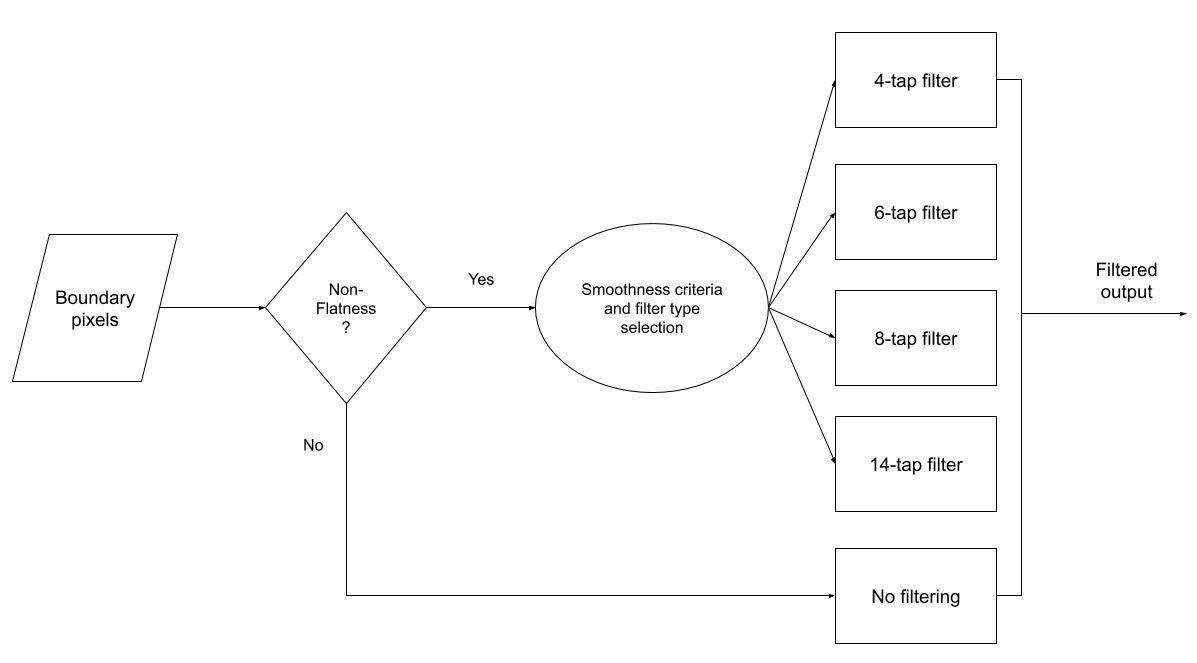

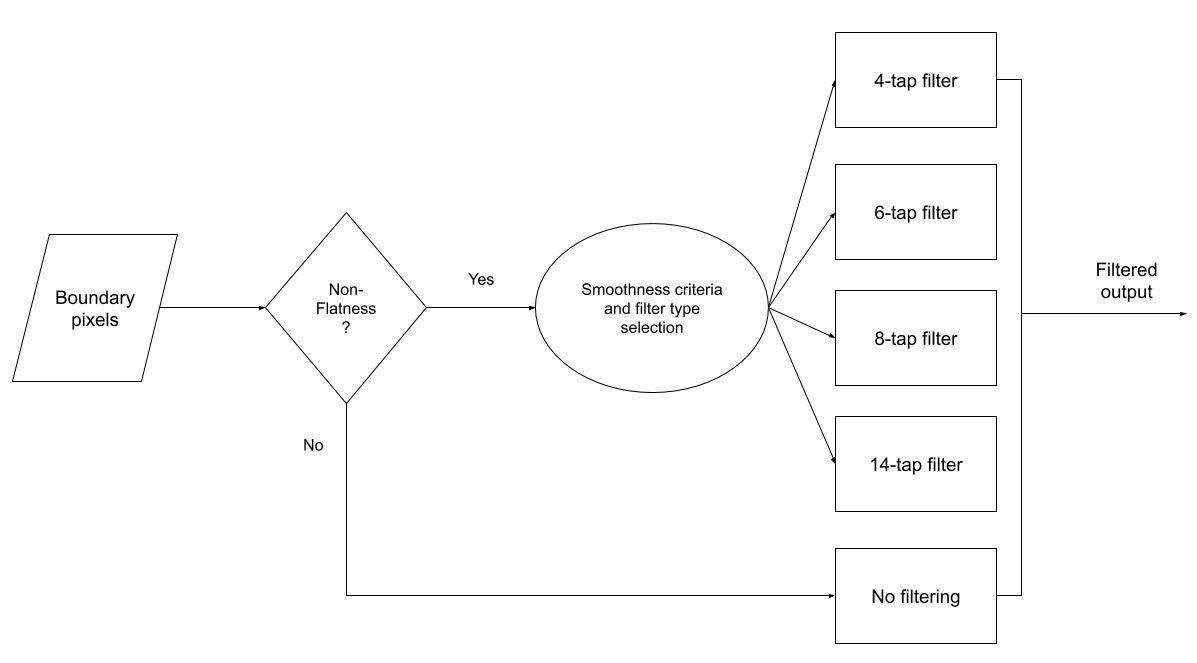

- The workflow of the deblocking filter is shown in Fig. 1.

Boundary pixels pass through a non-flatness check, followed by a step that determines smoothness and selects the proper filter type (4-, 6-, 8- or 14-tap filter).

If the non-flatness criteria are not satisfied, the encoder does not apply deblocking filtering to these boundary pixels.

Fig. 1. The workflow of the deblocking filter

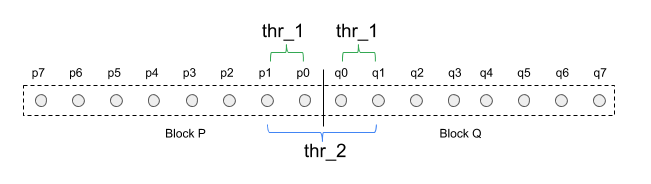

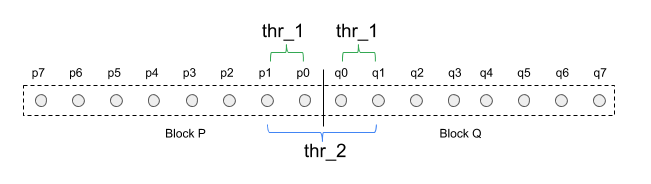

- Non-flatness is determined by the boundary pixels and thresholds, as shown in Fig. 2.

Filtering is applied when \(|p_0-p_1| < thr_1\), \(|q_0-q_1| < thr_1\), and \(2|p_0-q_0| + |p_1-q_1|/2 < thr_2\).

Fig. 2. Non-flatness of the pixel boundary

- Thresholds ("thr_1" and "thr_2") are determined by the filter level.

In AV1, four filter levels are used for each frame (luma vertical, luma horizontal, U and V), based on the following observations:

Luma and chroma planes have different characteristics, including subsampling (different plane sizes) and coding quality (chroma planes are better coded).

Therefore, chroma planes need less deblocking filtering than the luma plane.

In addition, content texture has different spatial characteristics: the vertical and horizontal directions may need different levels of filtering.

The selection of these filter levels is described in the following section.

- Algorithm

- The encoder selects filter levels given the current frame buffer and the method.

By default, "LPF_PICK_FROM_FULL_IMAGE" is used, which should provide the most appropriate filter levels.

For video on demand (VOD) mode, if the speed setting is larger than 5, "LPF_PICK_FROM_FULL_IMAGE_NON_DUAL" is used.

For real-time mode, if the speed setting is larger than 5, "LPF_PICK_FROM_Q" is used.
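The default choice of method described above can be sketched as follows. The enum only mirrors the method names listed earlier (its type name `LpfPickMethod` is hypothetical and it is not libaom's definition), and `choose_lpf_method` models only the mode and speed rules stated here; the real encoder may take other conditions into account.

```c
#include <assert.h>

/* Illustrative mirror of the method names listed above (hypothetical type). */
typedef enum {
  LPF_PICK_FROM_FULL_IMAGE,
  LPF_PICK_FROM_FULL_IMAGE_NON_DUAL,
  LPF_PICK_FROM_SUBIMAGE,
  LPF_PICK_FROM_Q,
  LPF_PICK_MINIMAL_LPF
} LpfPickMethod;

/* Hypothetical helper: is_realtime is nonzero for real-time mode and zero
 * for VOD mode; speed is the encoder speed setting. Only the rules stated
 * in the text are modeled. */
LpfPickMethod choose_lpf_method(int is_realtime, int speed) {
  assert(speed >= 0);
  if (speed > 5)
    return is_realtime ? LPF_PICK_FROM_Q : LPF_PICK_FROM_FULL_IMAGE_NON_DUAL;
  return LPF_PICK_FROM_FULL_IMAGE; /* default: full-image search */
}
```

For example, `choose_lpf_method(0, 6)` selects the non-dual full-image search, while `choose_lpf_method(1, 6)` falls back to the q-based estimate.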

- "LPF_PICK_FROM_FULL_IMAGE" method: filter levels are determined sequentially by a filter level search procedure (function "search_filter_level").

The order is:

1. Search for and determine the filter level of the Y plane, and set both the vertical filter level (filter_level[0]) and the horizontal filter level (filter_level[1]) to it.
2. Keeping the horizontal filter level fixed, search for and determine the vertical filter level.
3. Search for and determine the horizontal filter level.
4. Search for and determine the filter level of the U plane.
5. Search for and determine the filter level of the V plane.
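The search order can be sketched in C as below. `FrameFilterLevels`, `SearchFn`, `pick_full_image` and `toy_search` are hypothetical names; the callback stands in for "search_filter_level", and starting the joint luma search from the previous frame's vertical level is an assumption of this sketch.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical container for the four per-frame filter levels. */
typedef struct {
  int filter_level[2]; /* [0] = vertical, [1] = horizontal (luma) */
  int filter_level_u;
  int filter_level_v;
} FrameFilterLevels;

/* Hypothetical search callback standing in for "search_filter_level".
 * plane: 0 = Y, 1 = U, 2 = V. dir: 0 = vertical, 1 = horizontal, 2 = both.
 * levels holds the levels chosen so far, so the other direction stays fixed. */
typedef int (*SearchFn)(int plane, int dir, int start_level,
                        const FrameFilterLevels *levels, void *ctx);

void pick_full_image(FrameFilterLevels *cur, const FrameFilterLevels *last,
                     SearchFn search, void *ctx) {
  assert(cur != NULL && last != NULL && search != NULL);
  *cur = *last;
  /* Step 1: one luma level, used for both directions. */
  int y = search(0, 2, last->filter_level[0], cur, ctx);
  cur->filter_level[0] = cur->filter_level[1] = y;
  /* Step 2: vertical level, with the horizontal level held fixed. */
  cur->filter_level[0] = search(0, 0, cur->filter_level[0], cur, ctx);
  /* Step 3: horizontal level. */
  cur->filter_level[1] = search(0, 1, cur->filter_level[1], cur, ctx);
  /* Steps 4 and 5: chroma planes, one level each. */
  cur->filter_level_u = search(1, 2, last->filter_level_u, cur, ctx);
  cur->filter_level_v = search(2, 2, last->filter_level_v, cur, ctx);
}

/* Toy search for illustration: returns fixed levels and, during the vertical
 * search, records the horizontal level it sees in *(int *)ctx. */
int toy_search(int plane, int dir, int start_level,
               const FrameFilterLevels *levels, void *ctx) {
  static const int target[3][3] = {{30, 34, 32}, {0, 0, 12}, {0, 0, 10}};
  (void)start_level;
  if (plane == 0 && dir == 0 && ctx != NULL)
    *(int *)ctx = levels->filter_level[1];
  return target[plane][dir];
}
```

With `toy_search`, the result is vertical 30, horizontal 34, U 12 and V 10, and the recorded value is 32: while the vertical level was being searched, the horizontal level was still the joint luma level from step 1.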

- Searching for and determining a filter level is performed by the function "search_filter_level".

It starts with a base filter level ("filt_mid") initialized to the corresponding filter level of the previous frame.

A filter step ("filter_step") is determined as: filter_step = filt_mid < 16 ? 4 : filt_mid / 4.

Then a modified binary search is used to find a proper filter level.

In each iteration, set filt_low = filt_mid - filter_step and filt_high = filt_mid + filter_step.

This gives three candidate levels: "filt_mid", "filt_low" and "filt_high".

Deblocking filtering is applied to the current frame with each candidate filter level, and the sum of squared errors (SSE) between the source and filtered frames is computed.

Set "filt_best" to the filter level with the smallest SSE. If "filt_best" equals "filt_mid", halve "filter_step". Otherwise, set filt_mid = filt_best.

Repeat until "filter_step" reaches 0.
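A minimal sketch of this search loop, assuming a hypothetical callback `SseFn` that filters the frame at a given level and returns the SSE (the role "try_filter_frame" plays). The names `search_level_sketch` and `toy_sse` are hypothetical; the sketch omits the bias discussed below, clamps candidates to `[min_level, max_level]` (an assumption), and does not cache SSE values between iterations.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical callback: deblock the frame at `level`, return SSE vs. source. */
typedef int64_t (*SseFn)(int level, void *ctx);

int search_level_sketch(int last_level, int min_level, int max_level,
                        SseFn sse, void *ctx) {
  assert(min_level <= max_level && sse != 0);
  /* Start from the previous frame's level, clamped to the valid range. */
  int filt_mid = last_level < min_level   ? min_level
                 : last_level > max_level ? max_level
                                          : last_level;
  int filter_step = filt_mid < 16 ? 4 : filt_mid / 4;
  int64_t best_sse = sse(filt_mid, ctx); /* always the SSE of filt_mid */
  while (filter_step > 0) {
    int filt_low = filt_mid - filter_step;
    int filt_high = filt_mid + filter_step;
    if (filt_low < min_level) filt_low = min_level;
    if (filt_high > max_level) filt_high = max_level;
    int filt_best = filt_mid;
    int64_t sse_low = sse(filt_low, ctx);
    if (sse_low < best_sse) { best_sse = sse_low; filt_best = filt_low; }
    int64_t sse_high = sse(filt_high, ctx);
    if (sse_high < best_sse) { best_sse = sse_high; filt_best = filt_high; }
    if (filt_best == filt_mid)
      filter_step /= 2; /* no better neighbour: refine around filt_mid */
    else
      filt_mid = filt_best; /* move toward the better candidate */
  }
  return filt_mid;
}

/* Toy SSE for illustration: squared distance from a target level in *ctx. */
int64_t toy_sse(int level, void *ctx) {
  int64_t d = (int64_t)level - *(const int *)ctx;
  return d * d;
}
```

With `toy_sse` targeting 23 and a previous level of 10, the search moves 10, 14, 18, 22, halves the step to 2 and then 1, moves to 23, and stops once the step halves to 0.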

Note that in the comparison between SSE[filt_low] and SSE[filt_mid], a "bias" is introduced to slightly raise the filter level.

It is based on the observation that low filter levels tend to yield a smaller SSE and a higher PSNR for the current frame, while oversmoothing it, degrading its quality as a prediction reference for future frames and leading to suboptimal overall performance.

The function "try_filter_frame" is the reference for applying deblocking filtering with a given filter level and computing the SSE.
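The biased comparison can be isolated in a tiny helper. It follows the direction described in the text: `filt_low` only replaces `filt_mid` if its SSE is lower by more than `bias`, so near-ties keep the higher level. The helper name `choose_low_or_mid` is hypothetical, and since the text does not say how the bias value is derived, it is passed in as a parameter.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: returns the level kept after comparing filt_low with
 * filt_mid. A lower level must beat the current one by more than `bias`. */
int choose_low_or_mid(int filt_low, int64_t sse_low,
                      int filt_mid, int64_t sse_mid, int64_t bias) {
  assert(bias >= 0);
  return (sse_low + bias < sse_mid) ? filt_low : filt_mid;
}
```

With SSEs of 990 and 1000, a bias of 0 picks the lower level, while a bias of 20 keeps the higher one; a clearly better lower level (SSE 900) still wins.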

- "LPF_PICK_FROM_FULL_IMAGE_NON_DUAL" method: almost the same as "LPF_PICK_FROM_FULL_IMAGE", except that the vertical and horizontal filter levels are not searched separately.
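For contrast with the dual search, a sketch of the non-dual order: one luma search whose result is used for both directions, followed by the two chroma searches. The type and function names are hypothetical, and `toy_plane_search` only returns fixed levels and counts calls, so the difference is visible (three searches instead of five).

```c
#include <assert.h>

/* Hypothetical container for the four per-frame filter levels. */
typedef struct {
  int filter_level[2]; /* [0] = vertical, [1] = horizontal (luma) */
  int filter_level_u;
  int filter_level_v;
} FrameFilterLevels;

/* Hypothetical per-plane search: plane 0 = Y, 1 = U, 2 = V. */
typedef int (*PlaneSearchFn)(int plane, int start_level, void *ctx);

void pick_full_image_non_dual(FrameFilterLevels *cur,
                              const FrameFilterLevels *last,
                              PlaneSearchFn search, void *ctx) {
  assert(cur != 0 && last != 0 && search != 0);
  /* One luma level shared by both directions; no per-direction refinement. */
  int y = search(0, last->filter_level[0], ctx);
  cur->filter_level[0] = cur->filter_level[1] = y;
  cur->filter_level_u = search(1, last->filter_level_u, ctx);
  cur->filter_level_v = search(2, last->filter_level_v, ctx);
}

/* Toy search for illustration: counts calls in *(int *)ctx. */
int toy_plane_search(int plane, int start_level, void *ctx) {
  static const int target[3] = {32, 12, 10};
  (void)start_level;
  ++*(int *)ctx;
  return target[plane];
}
```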

- "LPF_PICK_FROM_Q" method: filter levels are determined by the quantization factor (q).

For 8-bit video:

- Key frames: filt_guess = q * 0.06699 - 1.60817
- Other frames: filt_guess = q * inter_frame_multiplier + 2.48225, where inter_frame_multiplier = q > 700 ? 0.04590 : 0.02295

For 10-bit and 12-bit video:

- filt_guess = q * 0.316206 + 3.87252

Then filter_level[0] = filter_level[1] = filter_level_u = filter_level_v = clamp(filt_guess, min_filter_level, max_filter_level), where min_filter_level = 0 and max_filter_level = 63 (AV1 filter levels are coded with 6 bits).

The equations were determined by linear fitting of filter levels generated by the "LPF_PICK_FROM_FULL_IMAGE" method.
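The q-based estimate can be sketched directly from the equations. Assumptions of this sketch: floating-point arithmetic, rounding to the nearest integer after clamping, and an upper bound of 63 because AV1 filter levels are coded with 6 bits; `filter_level_from_q` is a hypothetical name.

```c
#include <assert.h>

/* Hypothetical helper: estimate one filter level from the quantizer q.
 * The result is used for filter_level[0], filter_level[1], U and V alike. */
int filter_level_from_q(int q, int bit_depth, int is_key_frame) {
  assert(q >= 0 && (bit_depth == 8 || bit_depth == 10 || bit_depth == 12));
  const int min_filter_level = 0;
  const int max_filter_level = 63; /* assumed 6-bit range */
  double filt_guess;
  if (bit_depth == 8) {
    if (is_key_frame) {
      filt_guess = q * 0.06699 - 1.60817;
    } else {
      double inter_frame_multiplier = q > 700 ? 0.04590 : 0.02295;
      filt_guess = q * inter_frame_multiplier + 2.48225;
    }
  } else { /* 10-bit and 12-bit share one fit */
    filt_guess = q * 0.316206 + 3.87252;
  }
  /* Clamp first, then round to nearest (the value is non-negative here). */
  if (filt_guess < min_filter_level) filt_guess = min_filter_level;
  if (filt_guess > max_filter_level) filt_guess = max_filter_level;
  return (int)(filt_guess + 0.5);
}
```

For example, an 8-bit key frame with q = 100 gives 100 * 0.06699 - 1.60817 ≈ 5.09, so level 5; a 10-bit frame with q = 300 gives about 98.7, which is clamped to 63.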

Referenced by loopfilter_frame().